Blackhole Sink Pattern for Blue-Green Deployments

Simple approach for upgrading stateful streaming applications.

Blue-green deployment is a well-known technique for deploying applications, predominantly stateless services or web applications. Modern orchestration tools like Kubernetes make it extremely easy to use, so we don’t even think too much about the way applications are deployed nowadays.

However, when it comes to stream-processing applications, specifically stateful ones, this model is still not used widely. And there is a reason for that: because of state, bootstrapping a new version of an application may take minutes or even hours. Also, standard tools rely on some sort of healthcheck to make sure the new version is healthy and should not be rolled back; stream-processing applications rarely provide such mechanisms.

But zero-downtime deployments are too good to avoid! So, let’s see what exists today. I’ll primarily focus on solutions for Apache Flink, but they should also be applicable to Apache Spark, Kafka Streams and other streaming technologies.

Zero-downtime deployments at Lyft

Several years ago, Lyft explained how they perform zero-downtime blue-green deployments in this Flink Forward talk. They have a configuration store that’s used to specify which of the versions (“blue” or “green”) is the primary one. In this model, both versions could be emitting data at the same time (which means duplicates). That’s why downstream consumers should use the configuration store to only process data from the current primary version.

Initially, many actions were manual, e.g. deciding if the new version is healthy, terminating the old one, etc. Later, this workflow was contributed to Lyft’s Flink Kubernetes operator. The operator does automate many of these actions, but it doesn’t solve the primary issue with this approach: duplicates.

Blackhole Sink Pattern

In my opinion, a simpler and more generic way to perform a blue-green deployment in the case of streaming applications is what I call a Blackhole Sink Pattern (named because of the Flink Table API connector).

In this scenario, you also have multiple versions of the same application running, but, in addition, you have a way to switch all sinks to “blackhole”, “no-op” sinks.

So, the end-to-end workflow looks like this:

bootstrap a new version of the application (either from scratch or by using a savepoint) with blackhole sinks.

wait until it’s fully caught up and ready.

terminate the previous version and replace blackhole sinks with real sinks by redeploying the current version.

The latter part can be tricky: ideally, you want to perform it atomically. One way to do this is to provide a timestamp for both versions that’s used to perform the switch: blue job stops emitting data at timestamp X (by enabling blackhole sinks), and green job starts emitting data at timestamp X (by disabling blackhole sinks). Instead of using timestamps (which are never reliable in distributed systems), you can use something a bit more deterministic, like the Kafka topic offsets.

Finally, you can choose the amount of automation to implement for this workflow: everything can be done manually to start, then you can introduce tools to perform the switch or to orchestrate the whole flow.

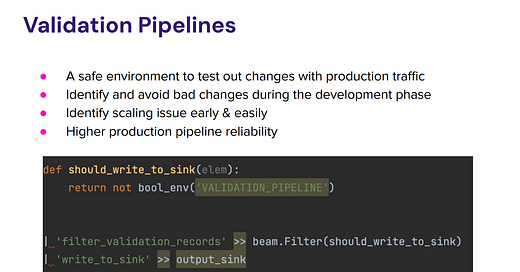

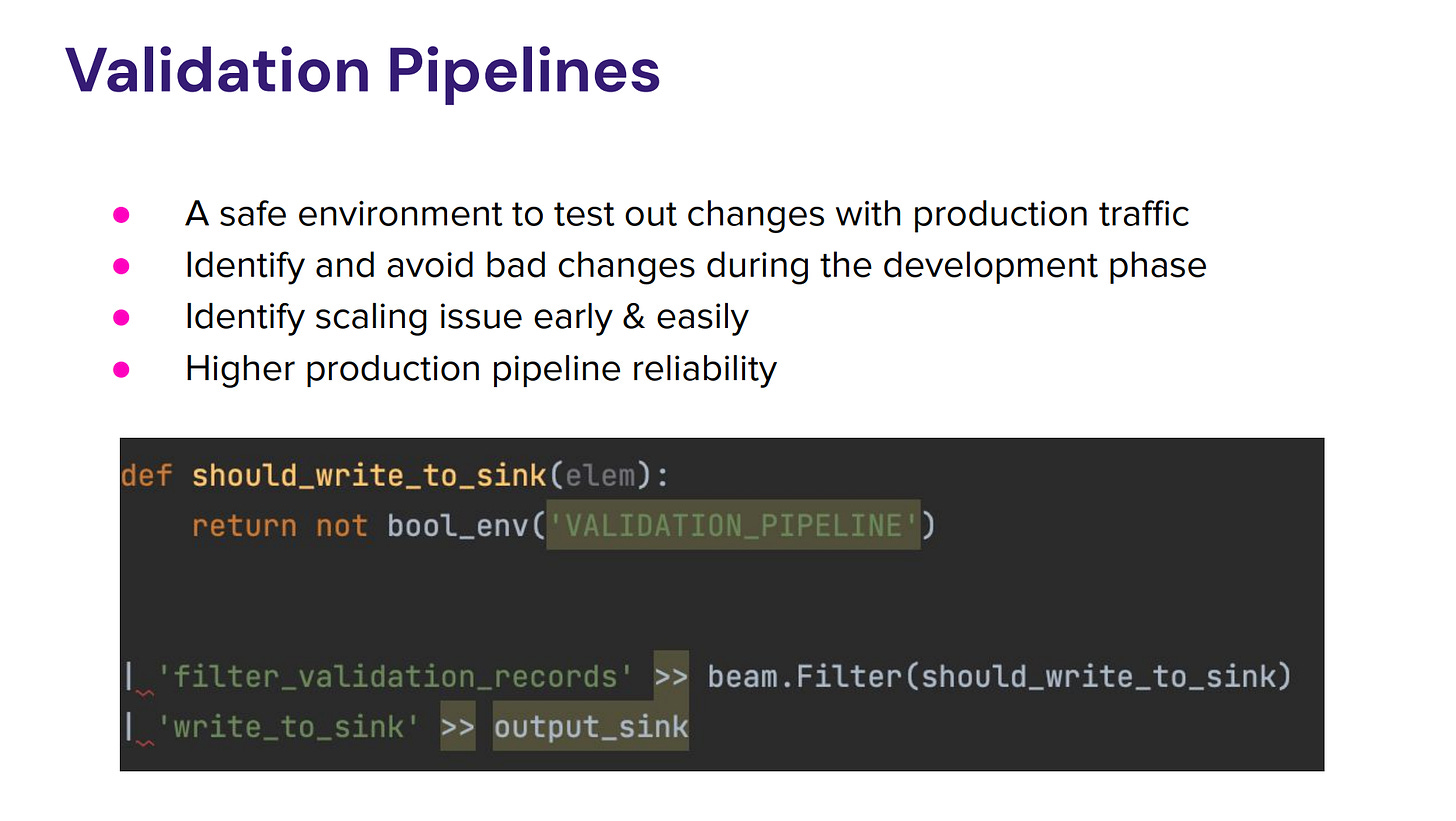

It’s quite interesting that Lyft ended up using exactly the same approach for what they call “validation pipelines” (presented at Current 2023):

Gotchas

Of course, there are several gotchas with this approach:

Watch out for other types of outputs. For example, it may be easy to disable a Kafka sink, but you may not know that the application also explicitly creates a schema in a Schema Registry (which may not be what you want; I accidentally broke things in production because of that once 😅).

Data sources should allow another concurrent consumer. Typically, it is not an issue when consuming from Kafka, but this can be hard when using messaging queues (e.g. SQS). Also, enough capacity should be dedicated to maintain multiple consumers.

Observability can be challenging. From the metrics and logs perspective, do you have a single job with two versions or two distinct jobs?