Flink Forward conference happened last week in Seattle, Washington. It’s the main Apache Flink event which includes many practitioners from companies like Netflix, Apple, Stripe, Lyft, etc.

Talk recordings are not yet available, but they will be published on Flink Forward’s YouTube channel.

Ververica Announcements

Ververica announced that their Cloud offering is now GA! I admit I didn’t have a chance to try it out yet, but I will soon.

They also announced going “Open Core” with their Ververica Runtime Assembly (VERA) Flink runtime, which, they claim, could be up to 2x faster than open-source Apache Flink.

It seems like most of the improvements come from a custom state backend - GeminiStateBackend. If I remember correctly, a custom state backend was one of the first things Immerok built before being acquired. I feel like the standard Flink’s RocksDB state backend has ok performance but can be optimized a lot. However, both Ververica and Confluent already have proprietary state backends, so they’re not incentivized to improve the open-source one.

By the way, it’s very notable how much Ververica and Confluent compete with each other right now: Ververica Cloud is now GA, and managed Flink in Confluent Cloud will be GA next year. Both companies run Flink-centered events (Flink Forward vs Current), and both provide educational courses / training. Yes, Confluent has many customers already using Kafka, so selling Flink (especially Flink that’s well-integrated into the platform) is straightforward, but Alibaba (Ververica’s parent company) has a $200B+ market cap… So, this is going to be interesting 🍿.

Stream and Batch Unification

Stream and Batch unification was a hot topic during the event: it was mentioned during the opening keynote several times, and there were a few talks going deep into the unified architecture and even batch (!) improvements.

Ververica presented its Streamhouse vision again. It’s primarily based on Apache Paimon, which is still not that popular compared to the other Lakehouse formats. Things may change with an upcoming book.

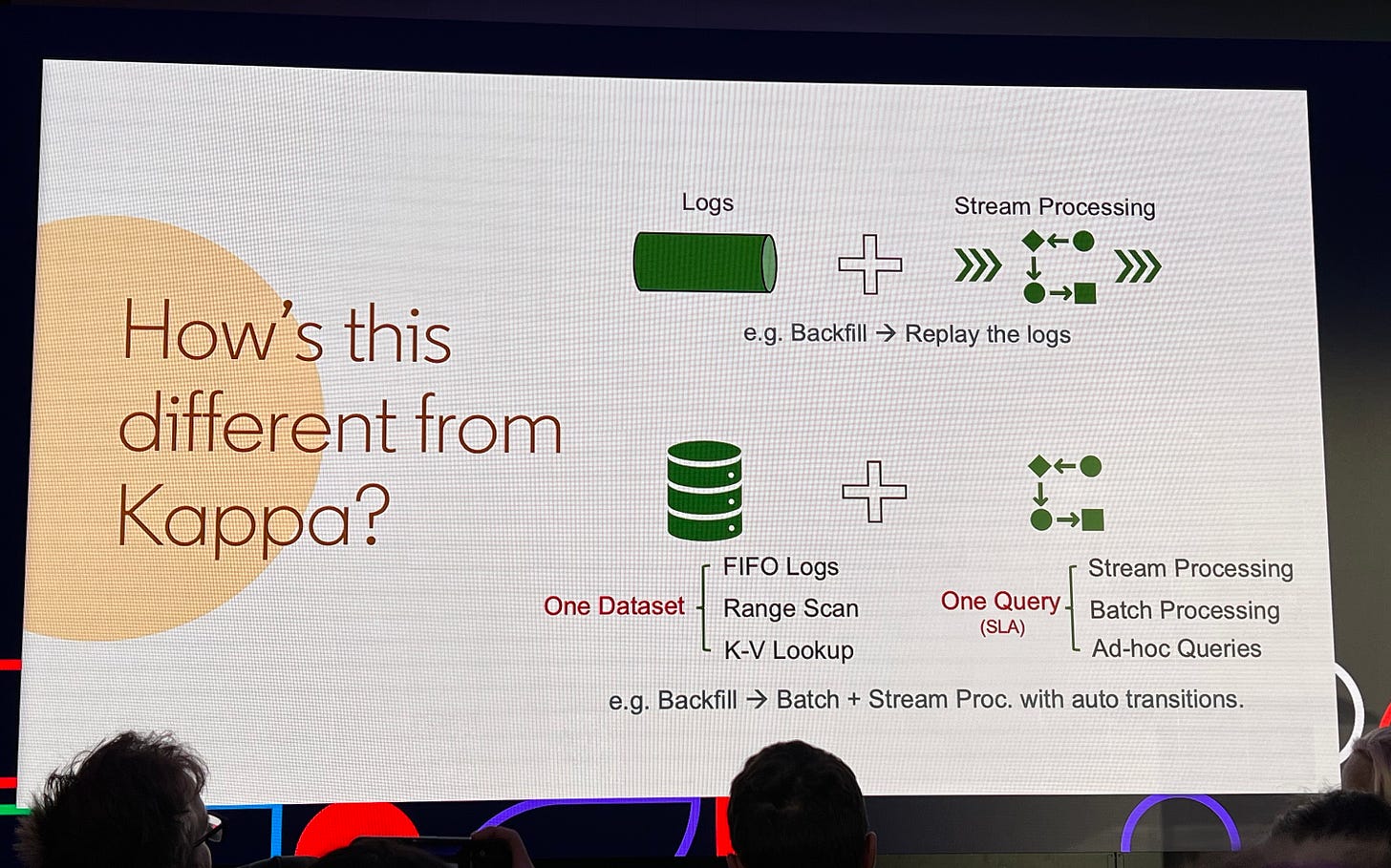

I was actually more impressed with LinkedIn’s take (presented by Jiangjie Qin) on stream and batch unification. It’s worth watching the talk, but the most interesting bit that I wanted to highlight is this:

They talked about having datasets that can support different types of queries, for example:

A dataset as FIFO logs for stream processing.

The same dataset supporting range scans for batch processing.

The same dataset supporting key-value lookups for ad-hoc querying.

This deeply resonates with the Consolidated Data Engine idea I covered a few months ago in this newsletter.

They also shared how they want to use Apache Beam as a way to unify batch and streaming engines. This is where we disagree. Apache Beam is a good framework for authoring Google Dataflow pipelines, but I would not recommend it as a layer on top of Apache Flink or Apache Spark. Why? Beam needs to be able to support a variety of technologies, so instead of doing one thing really well, it does several things just ok. When using Beam, you can’t leverage any of the more sophisticated features; you have to accept doing less. I remember Beam didn’t support user-provided state in Session Windows for years, for example.

What should you use instead? If you really don’t want to support multiple frameworks, just choose one. The latest Flink version has pretty decent batch support, and things will be even better in 2.0. Spark, with the latest Project Lightspeed improvements, can also satisfy many streaming requirements (unless you need sub-second latency).

YAML Pipelines

I first noticed this trend a few months ago: almost every Big Tech company providing some form of managed Flink offering represents a Flink pipeline in a YAML config file. I’ve seen examples from LinkedIn and Lyft before. Apple and DoorDash showed their versions at Flink Forward.

This makes a lot of sense: GUIs for creating data pipelines only look good in demos. Engineers need to be able to put a pipeline config in version control, run CI/CD, etc. Kubernetes operators make it very easy to only expose a high-level declarative configuration to the end users, doing all the heavy lifting.

I also noticed that many of these YAML configurations address very specific use cases, e.g. building ML features in Lyft’s case.

And we’re doing the same thing at Goldsky 😉.

Other Talks

Since recordings are not yet available, I’ve only watched several talks during the event, but there are a few that I can recommend:

Kubernetes-like Reconciliation Protocol for Managed Flink Services from Sharon Xie at Decodable. It was a fantastic talk showing the evolution of their architecture. It resonates a lot with me as a person who designed a similar system.

Streaming from Iceberg with Flink: An Apple Maps Journey from Sergio Chong Loo at Apple. Streaming from Iceberg may sound weird, but Sergio makes a strong case.

Enhancing Flink Streaming Applications: Synchronous API for Financial Risk Mitigation from Jerome Gagnon at Wealthsimple. With Queryable State going away in Flink 2.0, what are the options to perform any kind of Synchronous API call for Flink state? Obviously, you can write your data to a fast key-value store and query that, but Jerome presented another idea: using a Request-Reply pattern.

Flink SQL: the Challenges of Implementing a Modern Streaming SQL Engine from Jark Wu at Alibaba Cloud. A great explainer about all the things that can go wrong with Flink SQL. Probably nothing new for people who have to deal with Flink SQL regularly.

New Content

I’ve recently published a blog post about data streaming for blockchain data.